Google I/O returned in May 2025 with a flood of announcements, and yes, the hype was justified.

The event, held at the Shoreline Amphitheatre in Mountain View on May 20–21, showcased Google’s biggest leaps in AI, mobile, and hardware yet.

If you haven’t had a chance to watch the full keynotes, this recap covers the highlights.

So, what did Google reveal at I/O 2025?

Across two days of keynotes and sessions, we saw Android 15, the next-gen Gemini model, and a lineup of AI-first devices take center stage.

Sundar Pichai opened the show by spotlighting Google’s rapid progress in artificial intelligence and user experience.

And unlike last year’s I/O, where many features were still experimental, this year was all about delivery. These were polished, production-ready tools rolling out at scale.

From Android 15’s privacy upgrades to AI Mode in Google Search and new Pixel hardware, I/O 2025 made one thing loud and clear: AI isn’t just part of Google’s ecosystem anymore. It is the ecosystem.

TL;DR:

- Google I/O 2025 introduced major AI upgrades across Android, Search, Workspace, and hardware

- Android 15 features better multitasking, smarter UI, and new privacy tools like Private Space and AI-powered theft detection

- Gemini 2.5 Pro powers new tools like Smart Reply in Gmail and AI Overviews in Search, now live for over 1.5 billion users

- The Pixel Fold 2 and Pixel 9 showcase AI-first hardware with upgraded cameras and real-time features like Magic Editor

- Workspace apps like Docs and Gmail now generate replies, summaries, and visuals using Gemini AI

- Developers get access to Gemini 2.5 through Vertex AI, with tools like Firebase AI Logic and Gemini-powered Android Studio

- Project Astra and AI Mode preview a future of camera-based search and ambient AI assistants across devices

- Google’s message is clear: AI is not a feature, it’s the foundation of how users search, work, and interact across the web

New Features in Android 15 From I/O 2025

Android 15 made its debut with a focus on speed, intelligence, and a sleeker, more personalized interface.

Google’s updates include better multitasking on large screens and a new Material 3 Expressive design language that makes the UI feel more dynamic and tailored to you.

Underneath, the OS is sharper at predicting what you’ll do next and runs smoother overall, a clear step forward in Android’s performance polish.

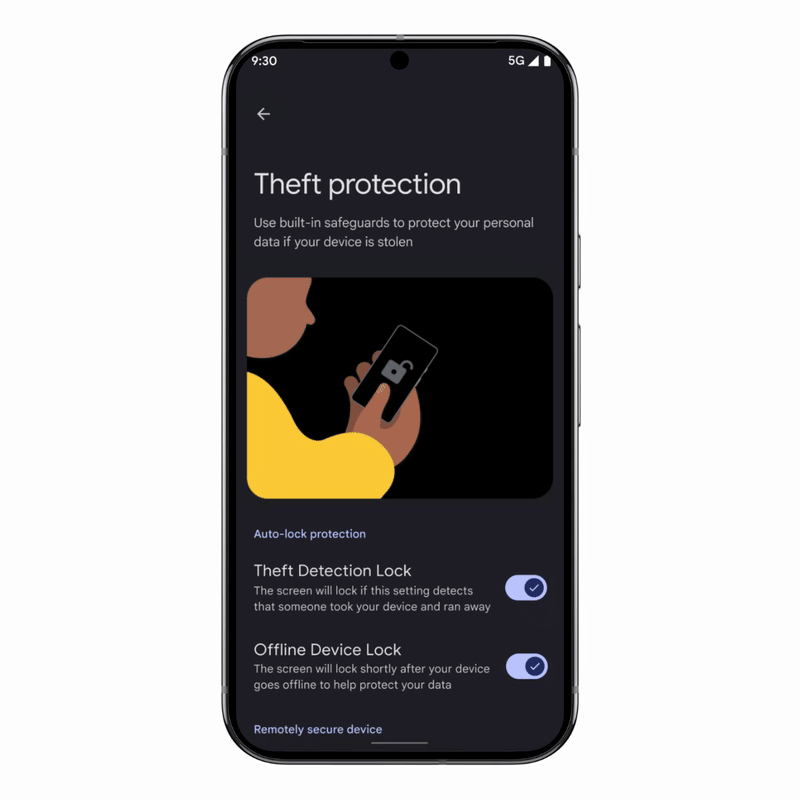

Privacy also got a serious upgrade. Android 15 introduces Private Space, a secure zone that locks sensitive apps behind extra authentication.

Source: Google

And thanks to AI-powered anti-theft tech, your phone can detect if it’s stolen and lock itself automatically, while key privacy settings now require identity verification.

Source: Google

Android 15 also infuses more on-device AI into the user experience.

The OS leverages context and learning to provide proactive suggestions, and Google has added new AI-driven tricks like “Circle to Search” for instant visual search assistance on your screen.

From smarter Smart Replies to predictive app actions, Google is making Android more context-aware and personalized. Your phone can now better predict what you need before you even tap.

Gemini and AI Developments

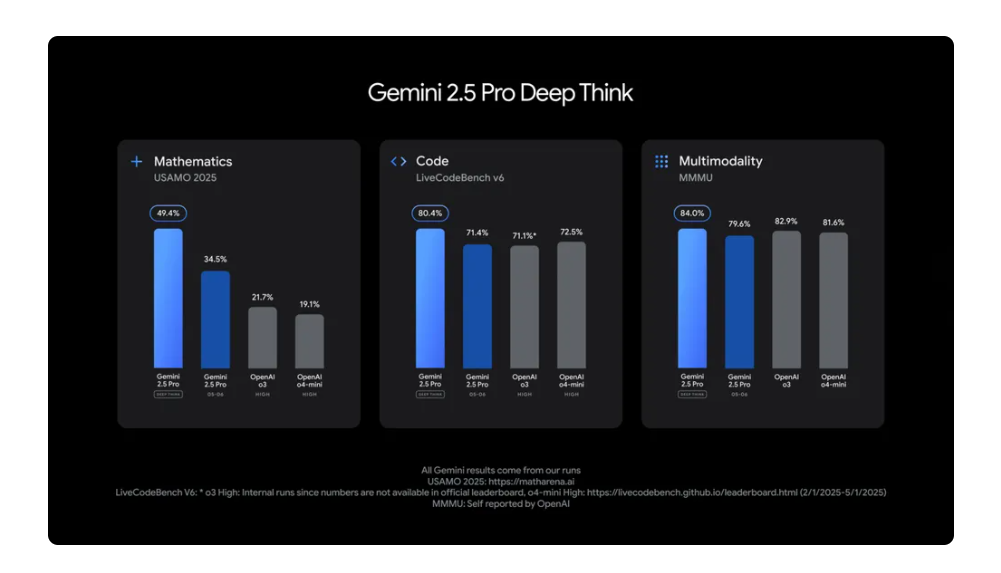

AI took the spotlight at Google I/O 2025, with Gemini 2.5 Pro leading the charge.

The upgraded model brings sharper reasoning, faster response times, and support for longer context windows, meaning it can understand and process more information in one go.

A new “Deep Think” mode lets Gemini evaluate multiple possible answers before responding, giving it a more thoughtful, problem-solving edge.
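Google has not published how Deep Think works internally, so the snippet below is only a toy illustration of the general idea of weighing several candidate answers before replying. It uses simple majority voting, a well-known technique sometimes called self-consistency, and it is not Google’s method. The `sample_answer` callable and the `answer_with_voting` name are hypothetical stand-ins for whatever model call you use.

```python
from collections import Counter
from typing import Callable, List


def answer_with_voting(question: str,
                       sample_answer: Callable[[str], str],
                       n_samples: int = 5) -> str:
    """Draw several candidate answers and return the most common one.

    `sample_answer` is a hypothetical function that asks a model the
    question once and returns its answer as a string.
    """
    candidates: List[str] = [sample_answer(question) for _ in range(n_samples)]
    # most_common(1) returns [(answer, count)]; on a tie, the answer
    # that was seen first wins.
    best, _count = Counter(candidates).most_common(1)[0]
    return best
```

In practice the sampler would call a model with some randomness so the candidates differ; the voting step then favors the answer the model reaches most consistently.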

Source: Google

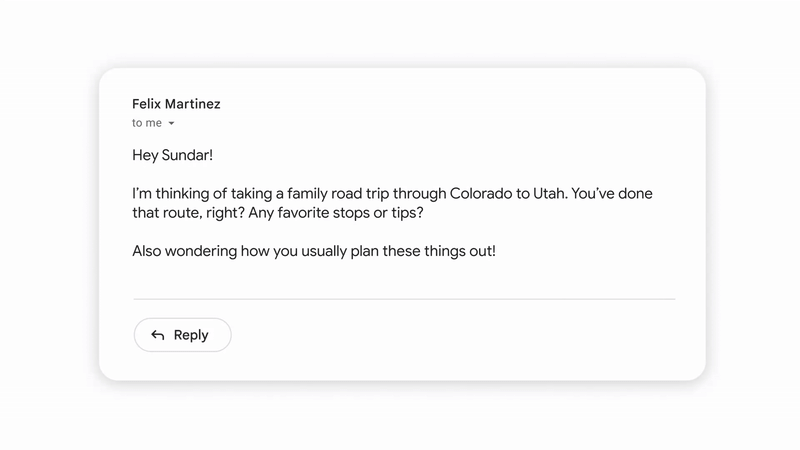

Google isn’t just improving Gemini; it’s embedding it deeper into daily life. Gmail now offers Smart Replies tailored to your writing style, pulling context from past emails and Drive files.

The Gemini app is morphing into a true personal assistant, with 400+ million users and new features like Gemini Live, which can see your screen or camera in real time and will soon connect with Google Maps and Calendar for on-the-fly assistance.

Source: Google

Search is also evolving. AI Overviews, powered by Gemini, has moved from experiment to core feature and is now live for over 1.5 billion users.

You’ll see cited answers, context-aware suggestions, and in the U.S., a new AI Mode that supports complex queries, follow-ups, and even live visual searches with your camera. Google’s universal assistant is starting to feel very real.

Source: Google

Google Cloud and Workspace Tools for Developers

Google I/O 2025 wasn’t just about consumer features; it delivered big news for developers, especially in cloud and Workspace integration.

Google showcased how Gemini-powered AI is supercharging its Workspace apps: you can use generative AI to draft and edit in Docs, generate formulas or summaries in Sheets, and even have Gmail compose emails with a click (“Help me write” on mobile).

Source: Google

In Google Meet, Gemini features (previously branded Duet AI) can automatically create meeting summaries and action items, or even “attend” a meeting on your behalf and send you a recap.

All these tools work in unison. For instance, an AI-generated brainstorming doc can be shared in Meet, and Gmail’s replies will soon leverage context from your Calendar and Docs to sound more like you, demonstrating cross-tool synergy in Workspace.

The Developer Angle: SDKs, APIs, and Project Tools

For developers, Project IDX emerged from preview with major enhancements.

Now folded into Firebase Studio, it provides a cloud-based, AI-driven coding environment accessible entirely in the browser.

This means you can import a repo or Figma design, spin up an IDE, and get real-time coding assistance from Google’s Gemini models – all without local setup.

The platform supports live collaboration (think Google Docs for code) and has an AI pair programmer that can generate code, answer questions, and even build app UIs from natural language prompts.

Google demonstrated how you can describe an app idea and let the AI App Prototyping agent scaffold the project, create UI elements, and suggest backend services, drastically cutting dev time.

Developers also gained new APIs and SDKs to tap into Google’s AI might.

Gemini API and Vertex AI services (once limited to enterprise) are now more accessible to smaller dev teams, allowing them to integrate Google’s large models into their own apps for text, image, and code generation.
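To make that concrete, here is a minimal sketch of what a text-generation call to the Gemini API can look like over plain REST, using only Python’s standard library. It builds the request without sending it, so no key is needed to read or test it. The helper names `build_generate_request` and `extract_text` are our own, and the default model ID and `v1beta` path are assumptions that may change, so check Google’s current API reference before relying on them.

```python
import json
import urllib.request

# Public REST base URL for the Gemini API (assumed current; verify in the docs).
API_BASE = "https://generativelanguage.googleapis.com/v1beta"


def build_generate_request(prompt: str, api_key: str,
                           model: str = "gemini-2.5-flash") -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    url = f"{API_BASE}/models/{model}:generateContent"
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # key is passed as a header, not in the URL
        },
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a parsed response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)
```

To actually send the request, pass it to `urllib.request.urlopen`, parse the body with `json.load`, and hand the result to `extract_text`. Swapping `model` to `"gemini-2.5-pro"` targets the Pro model instead of Flash.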

Google introduced Jules, an autonomous coding agent that can write tests or fix bugs asynchronously while you work on other tasks.

On the design side, Google’s new Stitch tool can turn sketches or prompts into full interface layouts, and the updated Material 3 Expressive widgets make it easy to reflect Android 15’s design system out of the box.

But the real headline? Wider access to Gemini 2.5 via the Vertex AI platform.

Both Flash and Pro versions are now open to devs of all sizes, and Gemini Code Assist is free in major IDEs. That means indie builders get the same AI muscle as big tech and the freedom to create smarter, faster, and at scale. Google’s giving devs the keys to build what’s next.

Just launched a new AI-first product or feature?

Run it through our Website SEO Grader and see how well it’s optimized for Google’s evolving Search and AI ranking signals.

What It Means for Users and the Tech Industry

One thing is clear, whether you watched the full keynotes or are catching up through this recap: after I/O 2025, Google isn’t playing catch-up. It’s trying to lead the AI race outright. With deep integrations across hardware (Tensor chips) and software (Search, Workspace, and beyond), Google is building an AI ecosystem that its rivals will struggle to match.

AI features like Gemini’s advanced reasoning and Search’s AI Mode aren’t tech demos; they’re a direct shot at ChatGPT, Bing AI, and whatever Apple’s cooking in the background.

For everyday users, that means a web experience that feels faster, more intuitive, and increasingly hands-free.

Search will give cited, conversational answers. You’ll be able to try on products virtually, follow up with live questions at dedicated forums, and get smarter results without hopping between apps.

Google is betting that users will come to expect this kind of intuitive, AI-everywhere experience, and it’s probably right.

Companies will need to optimize for AI-driven search visibility (e.g., ensuring their content is cited in AI overviews) and adapt to new user behaviors (fewer clicks on “10 blue links” and more voice/image queries).

From an industry perspective, Google is effectively saying that the era of static results and manual app usage is ending, and those who don’t innovate with AI will be left behind.

Wondering how your brand fits into Google’s AI-first ecosystem?

Book a free discovery call with our SEO experts. We’ll help tech teams audit post-I/O opportunities and adapt quickly.

Google’s Vision for the Future

At I/O 2025, Google laid out its vision of the future: ambient computing with AI infused at every layer.

CEO Sundar Pichai described a world where technology is intuitive, proactive, and fades into the background, whether you’re on a phone, watching YouTube, or wearing AR glasses.

That vision comes to life with AI features like Gemini Live, which lets users “search what they see” using their camera in real time, and Android XR projects that bring AI directly into wearable devices.

But Google isn’t just chasing capability; it’s also prioritizing ethics, accessibility, and sustainability in its AI rollout.

The latest content-generation AI models, Imagen 4 and Veo 3, now include automatic watermarking and built-in safety filters to promote responsible use of AI visuals.

Throughout the event, Google emphasized the need for responsible AI governance. That means processing data on-device where possible, encrypting sensitive information end-to-end, and, crucially, deploying AI in ways that empower rather than replace people.

A Strategic Reset

Google I/O 2025 wasn’t just a product update; it was a strategic reset for the AI era. Android 15 pushes mobile privacy and performance forward. Gemini AI models are now embedded in everything from Search to Workspace.

And Google’s hardware lineup proves it’s serious about delivering AI-first experiences across every screen.

For marketers, SEOs, and developers, the message is clear: AI is now the fabric of search, not just a feature.

That means rethinking content strategies for generative answers, citation-driven visibility, and more natural, contextual interactions. SEO isn’t just about blue links anymore; it’s about being the trusted source AI pulls from.

Developers, meanwhile, now have access to powerful tools once reserved for Big Tech. With APIs like Gemini and platforms like Firebase AI Logic, small teams can build world-class products with fewer barriers.

Business owners should take note as well. AI is baked into every user touchpoint, and smart adoption means more efficient workflows, better customer experiences, and faster innovation.

We’re officially in the era of Search Everywhere and AI Everywhere. The brands that lean into this shift and optimize across platforms, formats, and contexts will lead.

Want to stay ahead of Google’s AI shifts?

Download our free Search Everywhere Optimization Guide. It’s your step-by-step playbook for ranking in AI-powered, multi-device search.
